In a recent post (Breakpoints with Side Effects) I wrote about non-breaking breakpoints that do something other than pause your code. One of the side effects that I mentioned was the ability to ignore all subsequent exceptions, as well as to restore the debugger's normal response to encountered exceptions (pausing the execution of your code).

In response, a reader posted a comment asking how to instruct the debugger to ignore exceptions, particularly those that cannot be anticipated. He wrote "when debugging unit-tests that have to trigger exceptions ... I'm not interested in the expected exceptions, I'm interested in the unexpected ones! Having the debugger break on them all, can be quite inconvenient."

Fortunately, Delphi and its debugger provide you with a number of mechanisms for ignoring exceptions. These techniques can be divided into two general categories. The first is to disable breaking on exceptions, even though the debugger is active. The second is to run your code without activating the debugger (but with the option to activate it at runtime). Let's begin with the first of these two approaches.

Disabling Exception Breaking

When you are running your application from the Delphi IDE, and the debugger is active, there are a number of ways to instruct the debugger to ignore exceptions. And by "ignore," I mean to instruct the debugger to not pause execution of your application when an exception is encountered.

Under normal circumstances, when you are running an application with the debugger active, an encountered exception causes the debugger to pause the execution of your code and display a dialog box describing the exception that occurred. This happens whether or not the exception is caught by an exception block.

For example, consider this overly simplistic code segment.

    var
      i, j, k, l, m: Integer;
    begin
      i := 23;
      j := 0;
      l := 2;
      m := 4;
      try
        k := i div j * (l div m);
      except
        k := 0;
      end;
      ShowMessage(IntToStr(k));

In this code segment, an EDivByZero exception is raised within the try block. But even though that exception is handled by an except clause, if you are running this code from the IDE with the debugger active, the debugger will pause your code, and display a message like the one shown in the following figure.

Figure 1. An exception has caused the debugger to pause execution

At this point, you have the option of selecting Break, which places you in debug mode, or selecting Continue, which resumes the execution of your code. The point here is that even though the exception is already handled by your code, the debugger has taken upon itself to get involved and pause the execution of your program.

The problem is even worse if the exception is not raised within the try block of a try-except. In those cases, selecting Continue causes the program to resume, at which point it immediately displays the exception in the default exception handler dialog box, the one intended to display the exception to the end user.

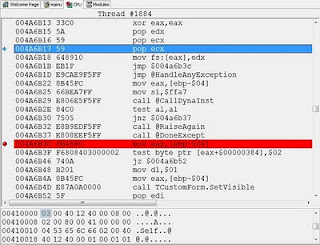

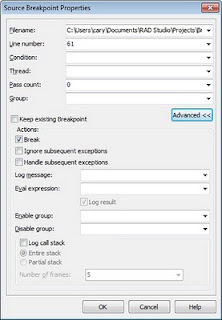

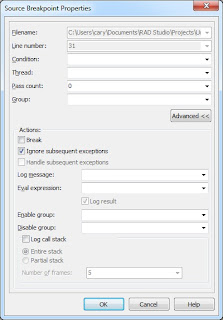

In situations where you do not want the debugger to pause the execution of your code in response to an exception, you have several options. As described in my previous article, you can set a non-breaking breakpoint at some point in your code prior to the execution of the code segment that raises the exception, and use the Ignore subsequent exceptions Advanced breakpoint option, shown in Figure 2, to instruct the debugger to ignore the exception.

Figure 2. The Breakpoint properties dialog box in Advanced mode

If you want to restore the normal operation of the debugger, place another non-breaking breakpoint downstream from the exception you want it to ignore, and set its Advanced breakpoint option to Handle subsequent exceptions.

This approach, while effective, is time consuming to put into place. It requires you to anticipate those locations in your code where you want to ignore exceptions, and place corresponding pairs of non-breaking breakpoints on either side of these code segments to ignore and then to once again handle exceptions.

Ignoring Specific Exceptions

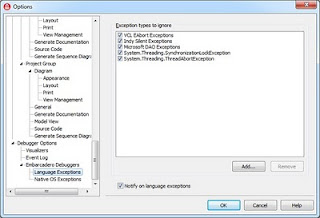

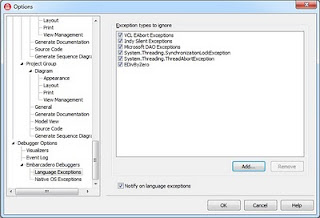

If you are only concerned about specific exceptions, there is a much easier alternative, which first became available in Delphi 2005. From the Language Exceptions node of the Options dialog box, shown in Figure 3, you can instruct the debugger to globally ignore specific exception hierarchies.

Figure 3. The Language Exceptions node of the Options dialog box

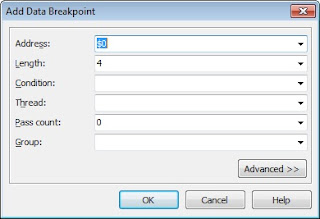

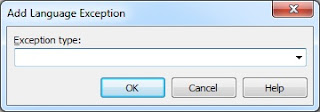

To do this, click the Add button to display the Add Language Exception dialog box, shown in Figure 4, and enter the name of the exception class that you want to ignore.

Figure 4. The Add Language Exception dialog box

Once added, this exception appears in the Exception Types to Ignore list of the Language Exceptions node of the Options dialog box. For instance, if you use the Add Language Exception dialog box to add an exception named EDivByZero, the Exception Types to Ignore list will include EDivByZero, as shown in Figure 5.

Figure 5. A newly added exception hierarchy will be ignored by the debugger

If you now run the previous code segment, the debugger will not pause your code upon encountering the division by zero exception. Likewise, it will also ignore any exceptions that descend from EDivByZero, meaning that this mechanism makes it easy to disable entire hierarchies of exceptions.

If, at a later time, you want to re-enable the debugger's normal behavior with respect to this exception (as well as those that descend from it), display the Language Exceptions node of the Options dialog box, and remove the checkmark from the checkbox next to EDivByZero. Alternatively, you can remove this exception class from the Exception Types to Ignore list altogether by selecting that exception in the list and clicking the Remove button.

If you want to instruct the debugger to ignore every exception that it encounters, the process is even easier. Simply uncheck the Notify on language exceptions checkbox that appears at the bottom of the Language Exceptions node of the Options dialog box. When this checkbox is unchecked, the debugger ignores all language exceptions. (This checkbox can be seen in Figures 3 and 5.)

To be honest, it would be unusual to add an exception such as EDivByZero to the Exception Types to Ignore list. Instead, you are more likely to add custom exceptions, those that you define for your own application, to this list. Specifically, you are more likely to add exceptions that you explicitly raise within your application using the raise keyword.

In general, it is considered a poor programming practice to raise an instance of one of Delphi's exception classes in your code. Instead, you should define your own exception classes, and raise one of them.

For example, you may define two classes of exceptions that you potentially raise at runtime from the code within your application. One of these may be raised due to simple user errors, errors that the user needs to be informed about. The other may be serious failures, ones that you cannot handle normally.

Here is an example of such a declaration:

    type
      ECustomException = class(Exception)
      end;

      EWarningException = class(ECustomException)
      end;

      ECriticalException = class(ECustomException)
      end;

Assuming that an EWarningException is raised by your code in response to a user's invalid input, this exception is one that you probably do not want the debugger to notify you of (while you are debugging the application). As a result, you probably do not want the debugger to display a dialog box like the one shown in Figure 1.

The simple solution for this problem is to instruct the debugger to ignore EWarningException instances, which is to say that you instruct the debugger to ignore your non-critical exceptions. That's the real power of the Exception Types to Ignore list.
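To make this concrete, here is a minimal console sketch of how such a non-critical exception might be raised and handled. The ParseQuantity routine and its message text are hypothetical, invented for this illustration. The uses clause assumes the unscoped SysUtils unit name; in newer versions of Delphi the unit is System.SysUtils.

```pascal
program WarningDemo;

{$APPTYPE CONSOLE}

uses
  SysUtils;

type
  ECustomException = class(Exception);
  EWarningException = class(ECustomException);
  ECriticalException = class(ECustomException);

// Hypothetical validation routine: raises the non-critical exception
// when the user's input is not a whole number.
function ParseQuantity(const Input: string): Integer;
begin
  if not TryStrToInt(Trim(Input), Result) then
    raise EWarningException.CreateFmt('"%s" is not a valid quantity', [Input]);
end;

begin
  try
    Writeln(ParseQuantity('12'));
    // The next call raises EWarningException. With the debugger active,
    // and EWarningException not in the ignore list, the debugger pauses
    // here even though the exception is handled below.
    Writeln(ParseQuantity('twelve'));
  except
    on E: EWarningException do
      Writeln('Warning: ', E.Message);
  end;
end.
```

If you run a program like this from the IDE with EWarningException added to the list, the debugger should let the except block deal with the bad input without interrupting you, while an ECriticalException raised elsewhere would still pause execution.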

Running without Debugging

When the debugger is active, unless you take steps to disable exception breaking, raised exceptions cause the debugger to pause the execution of your code. One approach to preventing the debugger from pausing the execution of your application upon encountering an exception is to run your code without the debugger being active.

There are two ways to do this. One is to first compile or build your application, and then to run it outside of the IDE. In other words, after compiling or building your project, execute the resulting EXE created during compilation.

Since Delphi 2005, there has been an easier way. You can select Run | Run Without Debugging, or simply press Ctrl-Shift-F9. In Delphi XE it's even easier, since there is a Run Without Debugging button on the Debug toolbar.

When you run without debugging, Delphi will first compile your executable, if necessary. It will then run your application, but without the services of the debugger. This means that all breakpoints are ignored (whether they are breaking or non-breaking), and exceptions do not load the debugger.

One advantage of running without debugging is that all of the editor's features, such as code navigation and code insight, remain active. This means that you can test your running application, and at the same time inspect and make changes to the project from which the application was compiled.

But what if you encounter a problem while running without debugging, and you want to enable the debugger in order to examine what's going on? Here again, Delphi offers a powerful option. You can attach the debugger to the running process (this option also was introduced in Delphi 2005). Once you have attached to the running process, the debugger will be active without your having to stop and restart your application.

To attach the debugger to an application that is running without debugging, select Run | Attach to Process. Delphi responds by displaying the Attach to Process dialog box shown in Figure 6.

Figure 6. The Attach to Process dialog box

If you want to immediately pause the application with the debugger active, ensure that the Pause after attach checkbox is checked before you click the Attach button. Personally, I usually leave this option unchecked, since I probably do not want the debugger to pause the application until it encounters a breakpoint or an exception that it is not instructed to ignore. Nonetheless, when you are ready to attach, click the Attach button.

Once attached, it is as if you had run the application with the debugger active. At this point, editor features such as code insight and class navigation are once again unavailable. However, once you are done using the debugger, you can select Run | Detach from Program to return to a state where your application is running without the services of the debugger.

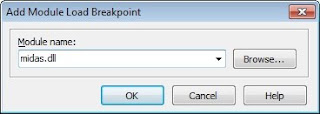

In closing, I want to mention one additional capability that the Attach to Process option provides you. If you are writing a DLL (dynamic link library) in the IDE, but this DLL is loaded by a process for which you do not have source code, you can use Attach to Process to debug your DLL.

Here's how you do it. When you are ready to debug your DLL, set your breakpoints and then compile the DLL program. Then, run the external program (the EXE) that will load your DLL.

Next, select Run | Attach to Process. Use the Attach to Process dialog box to select the program that will load your DLL. Once you are attached, do whatever you need to do to cause that program to load your DLL. As soon as the attached process loads your DLL, and one of your breakpoints is encountered (or an exception is raised), Delphi's debugger will pause the execution inside your DLL source, permitting you to inspect variables, trace into, step over, or whatever else you need the debugger for. When you are done with the debugger, click the Run button or press F9 to resume normal execution of the attached program.

Conclusion

While you need the services of Delphi's debugger, you do not necessarily need it all of the time. By learning how to instruct the debugger to ignore specific exceptions, as well as disable and enable particular breakpoints, you gain more control, and more precision, over your debugging tasks.

This posting is based on an article that I originally published in the Software Development Network Magazine (issue #107). This is the official magazine published by the Software Development Network (http://www.sdn.nl/).